2024年大数据最全阿里云安装Hadoop全家桶1,2024年最新别再说你不会

2024年大数据最全阿里云安装Hadoop全家桶(1),2024年最新别再说你不会

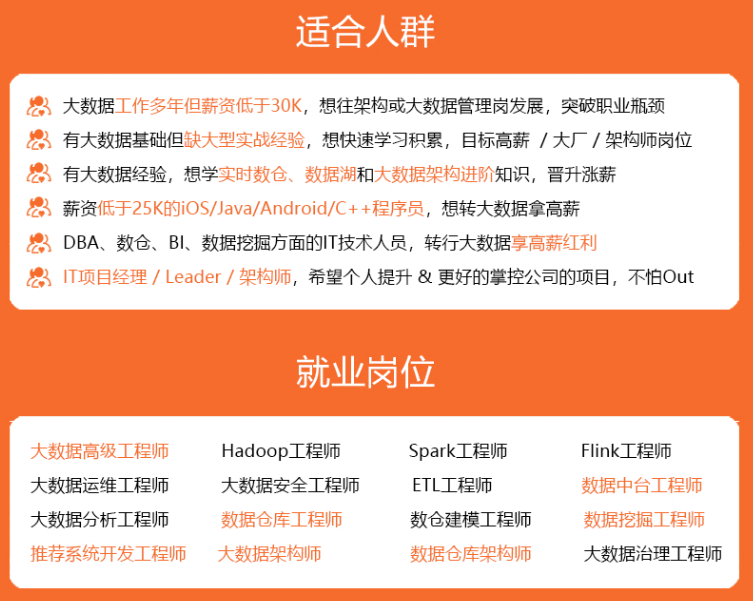

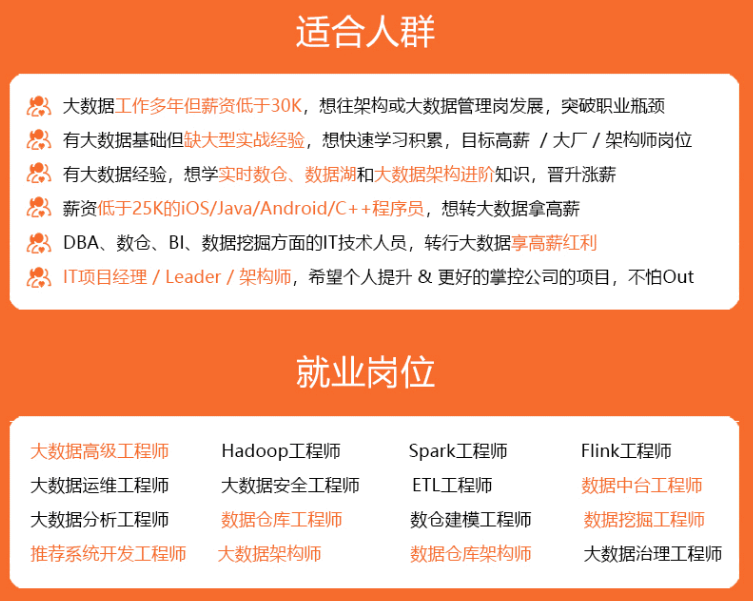

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!

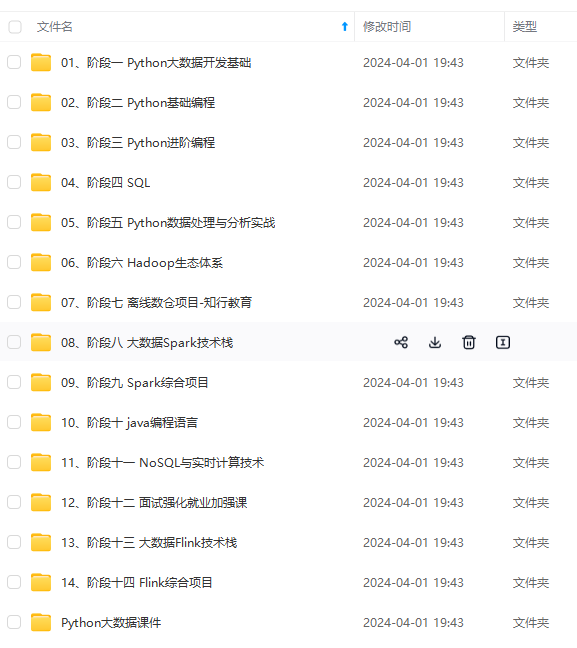

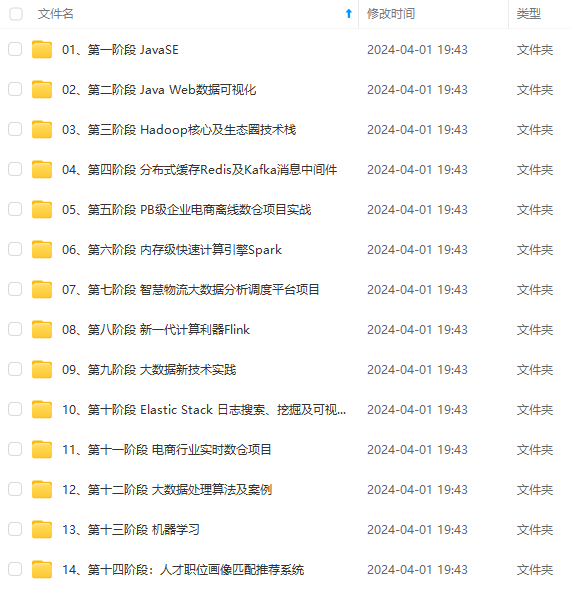

由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新

测试

配置内网ip互联

vim /etc/hosts

192.168.1.224 hadoop102 hadoop102

192.168.1.223 hadoop104 hadoop104

192.168.1.225 hadoop103 hadoop103

三台机器都需要

一个一个操作太麻烦了写一个脚本

在写脚本之前我们想要创建一个用户来管理我们后续的hadoop全家桶安装

于是创建一个hadoop用户

useradd hadoop

passwd hadoop

设置hadoop用户密码为1234切换到hadoop用户写脚本

vim xsync

#!/bin/bash

#1. 判断参数个数

if [ $# -lt 1 ]

then

echo Not Enough Arguement!

exit;

fi

#2. 遍历集群所有机器

for host in hadoop102 hadoop103 hadoop104

do

echo ==================== $host ====================

#3. 遍历所有目录,挨个发送

for file in $@

do

#4 判断文件是否存在

if [ -e $file ]

then

#5. 获取父目录

pdir=$(cd -P $(dirname $file); pwd)

#6. 获取当前文件的名称

fname=$(basename $file)

ssh $host "mkdir -p $pdir"

rsync -av $pdir/$fname $host:$pdir

else

echo $file does not exists!

fi

done

done请注意

这三个为自己的主机

给文件权限

chmod 777 xsync分发内容

xsync /etc/hosts

SSH互联

接下来就是互联按照往常的安装来说

分别是 root 和 hadoop 用户需要进行互联

这个软件有个好处就是可以批量操作

开始互联吧!

ssh-keygen -trsa -b 4096

ssh-copy-id hadoop102

ssh-copy-id hadoop103

ssh-copy-id hadoop104root 和 hadoop 用户都要操作一遍

jdk1.8

后续的安装都推荐使用hadoop用户且用hadoop102进行操作

先用root给hadoop权限

chown -R hadoop:hadoop /opt上传目录

mkdir /opt/software安装目录

mkdir /opt/module上传jdk,hadoop,zookeeper,kafka,flume

耐心等一会

现在上传完成开始进行jdk安装

tar -zxvf jdk-8u212-linux-x64.tar.gz -C /opt/module/修改名字(默认都在module操作,后续不提示)

mv jdk1.8.0_212/ jdk环境变量

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk

export PATH=$PATH:$JAVA_HOME/bin验证

分发

先不着急查看其他两台,profile还没有分发,等hadoop一起

Hadoop

解压

tar -zxvf hadoop-3.3.4.tar.gz -C /opt/module/改名

mv hadoop-3.3.4/ hadoop配置

cd hadoop/etc/hadoop/推荐使用软件自带的编辑器 xedit命令

xedit workers

hadoop102

hadoop103

hadoop104xedit hadoop-env.sh

export JAVA_HOME=/opt/module/jdk

export HADOOP_HOME=/opt/module/hadoop

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export HADOOP_LOG_DIR=$HADOOP_HOME/logs环境变量

#HADOOP_HOME

export HADOOP_HOME=/opt/module/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin分发环境

xsync /etc/proifle分发hadoop

xsync hadoop/检查java

检查hadoop

格式化

cd /opt/module/hadoop/

bin/hdfs namenode -format启动脚本

vim hdp.sh

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

exit ;

fi

case $1 in

"start")

echo " =================== 启动 hadoop集群 ==================="

echo " --------------- 启动 hdfs ---------------"

ssh hadoop102 "/opt/module/hadoop/sbin/start-dfs.sh"

echo " --------------- 启动 yarn ---------------"

ssh hadoop103 "/opt/module/hadoop/sbin/start-yarn.sh"

echo " --------------- 启动 historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop/bin/mapred --daemon start historyserver"

;;

"stop")

echo " =================== 关闭 hadoop集群 ==================="

echo " --------------- 关闭 historyserver ---------------"

ssh hadoop102 "/opt/module/hadoop/bin/mapred --daemon stop historyserver"

echo " --------------- 关闭 yarn ---------------"

ssh hadoop103 "/opt/module/hadoop/sbin/stop-yarn.sh"

echo " --------------- 关闭 hdfs ---------------"

ssh hadoop102 "/opt/module/hadoop/sbin/stop-dfs.sh"

;;

*)

echo "Input Args Error..."

;;

esac

给权限

chmod 777 hdp.shjps查看脚本

vim xcall

#! /bin/bash

for i in hadoop102 hadoop103 hadoop104

do

echo --------- $i ----------

ssh $i "/opt/module/jdk/bin/jps $$*"

done

chmod 777启动与关闭

hdp.sh start

hdp.sh stop

Zookeeper

解压修改名字

tar -zxvf apache-zookeeper-3.7.1-bin.tar.gz -C /opt/module/

mv apache-zookeeper-3.7.1-bin/ zookeeper配置

配置服务器编号

cd zookeeper/

mkdir zkData

cd zkData/

vim myid

2注意编号是2

修改配置文件

cd conf/

mv zoo_sample.cfg zoo.cfg

xedit zoo.cfg

dataDir=/opt/module/zookeeper/zkData

#######################cluster##########################

server.2=hadoop102:2888:3888

server.3=hadoop103:2888:3888

server.4=hadoop104:2888:3888

或者替换配置文件内容

分发

cd /opt/moudle

xsync zookeeper/修改hadoop103,hadoop104的myid配置

hadoop103 对应3

hadoop104 对应4脚本

cd /home/hadoop/bin

vim zk.sh

#!/bin/bash

# 设置JAVA_HOME和更新PATH环境变量

export JAVA_HOME=/opt/module/jdk

export PATH=$PATH:$JAVA_HOME/bin

# 检查输入参数

if [ $# -ne 1 ]; then

echo "用法: $0 {start|stop|status}"

exit 1

fi

# 执行操作

case "$1" in

start)

echo "---------- Zookeeper 启动 ------------"

/opt/module/zookeeper/bin/zkServer.sh start

ssh hadoop103 "export JAVA_HOME=/opt/module/jdk; export PATH=\$PATH:\$JAVA_HOME/bin; /opt/module/zookeeper/bin/zkServer.sh start"

ssh hadoop104 "export JAVA_HOME=/opt/module/jdk; export PATH=\$PATH:\$JAVA_HOME/bin; /opt/module/zookeeper/bin/zkServer.sh start"

;;

stop)

echo "---------- Zookeeper 停止 ------------"

/opt/module/zookeeper/bin/zkServer.sh stop

ssh hadoop103 "export JAVA_HOME=/opt/module/jdk; export PATH=\$PATH:\$JAVA_HOME/bin; /opt/module/zookeeper/bin/zkServer.sh stop"

ssh hadoop104 "export JAVA_HOME=/opt/module/jdk; export PATH=\$PATH:\$JAVA_HOME/bin; /opt/module/zookeeper/bin/zkServer.sh stop"

;;

status)

echo "---------- Zookeeper 状态 ------------"

/opt/module/zookeeper/bin/zkServer.sh status

ssh hadoop103 "export JAVA_HOME=/opt/module/jdk; export PATH=\$PATH:\$JAVA_HOME/bin; /opt/module/zookeeper/bin/zkServer.sh status"

ssh hadoop104 "export JAVA_HOME=/opt/module/jdk; export PATH=\$PATH:\$JAVA_HOME/bin; /opt/module/zookeeper/bin/zkServer.sh status"

;;

*)

echo "未知命令: $1"

echo "用法: $0 {start|stop|status}"

exit 2

;;

esac

kafka

解压和修改名

tar -zxvf kafka_2.12-3.3.1.tgz -C /opt/module/

mv kafka_2.12-3.3.1/ kafka配置

xedit server.properties

添加

advertised.listeners=PLAINTEXT://hadoop102:9092

修改

log.dirs=/opt/module/kafka/datas

zookeeper.connect=hadoop102:2181,hadoop103:2181,hadoop104:2181/kafka环境变量

#KAFKA_HOME

export KAFKA_HOME=/opt/module/kafka

export PATH=$PATH:$KAFKA_HOME/bin记得刷新

分发

xsync kafka/修改hadoop103/104的配置文件

[hadoop@hadoop103 module]$ vim kafka/config/server.properties

修改:

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=1

#broker对外暴露的IP和端口 (每个节点单独配置)

advertised.listeners=PLAINTEXT://hadoop103:9092

[hadoop@hadoop104 module]$ vim kafka/config/server.properties

修改:

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=2

#broker对外暴露的IP和端口 (每个节点单独配置)

advertised.listeners=PLAINTEXT://hadoop104:9092脚本

请记住kakfa是在zookeeper启动下才能成功启动

vim kf.sh

#!/bin/bash

# Kafka和Zookeeper的配置

KAFKA_HOME=/opt/module/kafka

ZOOKEEPER_HOME=/opt/module/zookeeper

JAVA_HOME=/opt/module/jdk

# 定义启动Kafka的函数

start_kafka() {

echo "Starting Kafka on hadoop102..."

$KAFKA_HOME/bin/kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties

echo "Starting Kafka on hadoop104..."

ssh hadoop104 "export JAVA_HOME=$JAVA_HOME; export KAFKA_HOME=$KAFKA_HOME; $KAFKA_HOME/bin/kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties"

echo "Starting Kafka on hadoop103..."

ssh hadoop103 "export JAVA_HOME=$JAVA_HOME; export KAFKA_HOME=$KAFKA_HOME; $KAFKA_HOME/bin/kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties"

}

# 定义停止Kafka的函数

stop_kafka() {

echo "Stopping Kafka on hadoop102..."

$KAFKA_HOME/bin/kafka-server-stop.sh

echo "Stopping Kafka on hadoop104..."

ssh hadoop104 "export KAFKA_HOME=$KAFKA_HOME; $KAFKA_HOME/bin/kafka-server-stop.sh"

echo "Stopping Kafka on hadoop103..."

ssh hadoop103 "export KAFKA_HOME=$KAFKA_HOME; $KAFKA_HOME/bin/kafka-server-stop.sh"

}

# 定义检查Kafka状态的函数

check_status() {

echo "Checking Kafka status on hadoop102..."

ssh hadoop102 "jps | grep -i kafka"

echo "Checking Kafka status on hadoop104..."

ssh hadoop104 "jps | grep -i kafka"

echo "Checking Kafka status on hadoop103..."

ssh hadoop103 "jps | grep -i kafka"

}

# 处理命令行参数

case "$1" in

start)

start_kafka

;;

stop)

stop_kafka

;;

status)

check_status

;;

*)

echo "Usage: $0 {start|stop|status}"

exit 1

esac

Flume

解压和修改名

tar -zxvf apache-flume-1.10.1-bin.tar.gz -C /opt/module/

mv apache-flume-1.10.1-bin/ flume配置

vim log4j2.xml

修改

<Property name="LOG_DIR">/opt/module/flume/log</Property>

添加

<Root level="INFO">

<AppenderRef ref="LogFile" />

<AppenderRef ref="Console" />

</Root>分发

xsync flume/MySQL

MySQL下载地址 (推荐)

或者用上面的

解压下载的

上传MySQL和hive

安装MySQL

cd /opt/software/MySQL/

sh install_mysql.shroot 密码是 000000

检查登录

mysql -root -p000000

安装成功

Hive

解压和修改名

tar -zxvf hive-3.1.3.tar.gz -C /opt/module/

mv apache-hive-3.1.3-bin/ hive配置

hive-env.sh

vim hive-env.sh

export HADOOP_HOME=/opt/module/hadoop

export HIVE_CONF_DIR=/opt/module/hive/conf

export HIVE_AUX_JARS_PATH=/opt/module/hive/libhive-site.xml

vim hive-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!--配置Hive保存元数据信息所需的 MySQL URL地址-->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop102:3306/metastore?useSSL=false&useUnicode=true&characterEncoding=UTF-8&allowPublicKeyRetrieval=true</value>

</property>

**既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!**

**由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新**

**[需要这份系统化资料的朋友,可以戳这里获取](https://bbs.csdn.net/topics/618545628)**

/module/hadoop

export HIVE_CONF_DIR=/opt/module/hive/conf

export HIVE_AUX_JARS_PATH=/opt/module/hive/libhive-site.xml

vim hive-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!--配置Hive保存元数据信息所需的 MySQL URL地址-->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop102:3306/metastore?useSSL=false&useUnicode=true&characterEncoding=UTF-8&allowPublicKeyRetrieval=true</value>

</property>

[外链图片转存中...(img-yC6Lkmto-1715607051199)]

[外链图片转存中...(img-XXLA924y-1715607051200)]

[外链图片转存中...(img-aHL59xhu-1715607051200)]

**既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,涵盖了95%以上大数据知识点,真正体系化!**

**由于文件比较多,这里只是将部分目录截图出来,全套包含大厂面经、学习笔记、源码讲义、实战项目、大纲路线、讲解视频,并且后续会持续更新**

**[需要这份系统化资料的朋友,可以戳这里获取](https://bbs.csdn.net/topics/618545628)**