DETR目标检测复现

目录

DETR目标检测复现

论文地址:

代码地址:

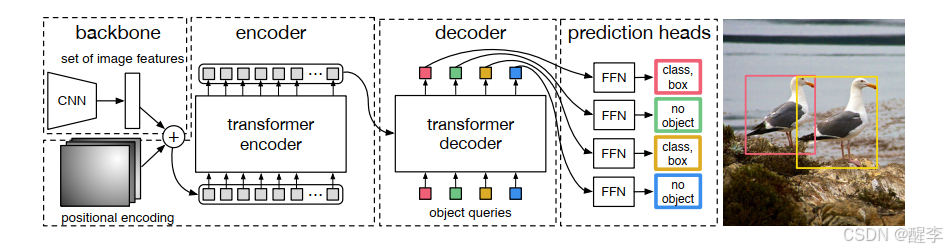

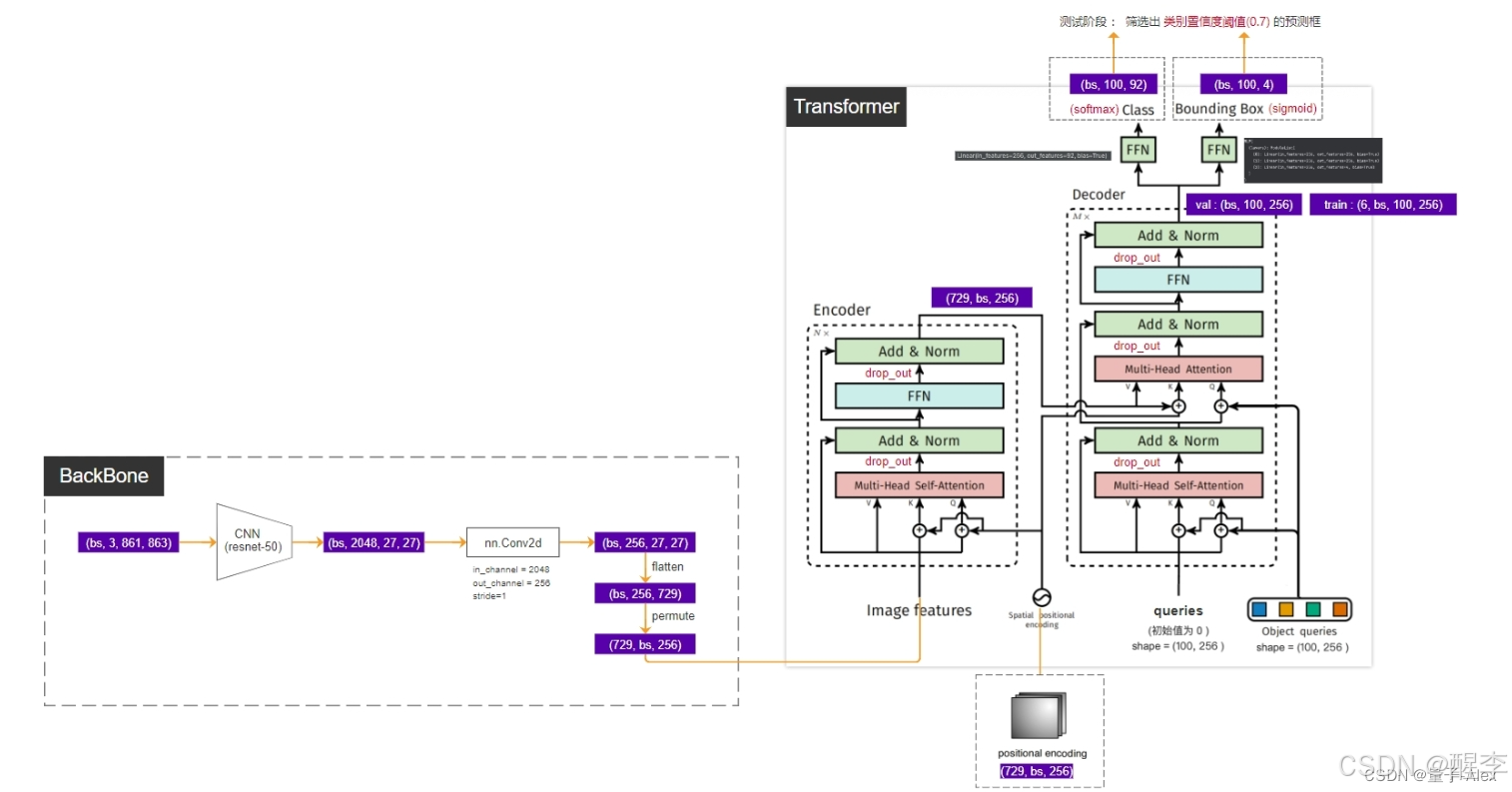

模型架构:

推理流程:

backbone: CNN提取图像特征,将图像展平

positional encoding: 实现可学习位置编码

transformer: encoder 输入特征图+位置编码(h*w, c)

输出一个全局上下文特征 (h*w, c)

decoder 输入编码器输出+一组可学习的对象查询(object queries)(n, c)

输出目标的边界框和类别 (n, c)

预测头: 一个FNN映射边框,一个FNN映射类别概率

二分图匹配:匈牙利算法

损失计算:类别损失(交叉熵)、边界框损失(L1和GIoU)

( , 侵删)

代码解读:

model

构建模型架构

backbone.py

实现骨干网络

- FrozenBatchNorm2d :冻结的批归一化层。

- BackboneBase :骨干网络的基础类。

- Backbone :具体的骨干网络实现,基于 ResNet。

- Joiner :将骨干网络的输出与位置编码(Position Encoding)结合。

- build_backbone :构建骨干网络函数。

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved

"""

Backbone modules.

"""

from collections import OrderedDict

import torch

import torch.nn.functional as F

import torchvision

from torch import nn

from torchvision.models._utils import IntermediateLayerGetter

from typing import Dict, List

from util.misc import NestedTensor, is_main_process

from .position_encoding import build_position_encoding

class FrozenBatchNorm2d(torch.nn.Module):

"""

BatchNorm2d where the batch statistics and the affine parameters are fixed.

Copy-paste from torchvision.misc.ops with added eps before rqsrt,

without which any other models than torchvision.models.resnet[18,34,50,101]

produce nans.

"""

def __init__(self, n):

super(FrozenBatchNorm2d, self).__init__()

#buffer缓冲区

self.register_buffer("weight", torch.ones(n))

self.register_buffer("bias", torch.zeros(n))

self.register_buffer("running_mean", torch.zeros(n)) #运行均值

self.register_buffer("running_var", torch.ones(n)) #运行方差

def _load_from_state_dict(self, state_dict, prefix, local_metadata, strict,

missing_keys, unexpected_keys, error_msgs):

# 删除字典中的num_batches_tracked

num_batches_tracked_key = prefix + 'num_batches_tracked'

if num_batches_tracked_key in state_dict:

del state_dict[num_batches_tracked_key]

#num_batches_tracked_key是批归一化层的计数器,用来记录已经训练过的批量数,对于冻结的批归一化,不需要了。

#调用父类的方法,完成参数加载

super(FrozenBatchNorm2d, self)._load_from_state_dict(

state_dict, prefix, local_metadata, strict,

missing_keys, unexpected_keys, error_msgs)

def forward(self, x):

# move reshapes to the beginning

# to make it fuser-friendly

w = self.weight.reshape(1, -1, 1, 1)

b = self.bias.reshape(1, -1, 1, 1)

rv = self.running_var.reshape(1, -1, 1, 1)

rm = self.running_mean.reshape(1, -1, 1, 1)

eps = 1e-5

#计算缩放和偏移,模拟BN的推理过程,但是固定统计量

scale = w * (rv + eps).rsqrt()

bias = b - rm * scale

return x * scale + bias

class BackboneBase(nn.Module):

def __init__(self, backbone: nn.Module, train_backbone: bool, num_channels: int, return_interm_layers: bool):

super().__init__()

#如果训练的话,只训练 backbone的layer2,layer3,layer4

for name, parameter in backbone.named_parameters():

if not train_backbone or 'layer2' not in name and 'layer3' not in name and 'layer4' not in name:

parameter.requires_grad_(False)

#用于从模型中指定层的输出

if return_interm_layers:

return_layers = {"layer1": "0", "layer2": "1", "layer3": "2", "layer4": "3"}

else:

return_layers = {'layer4': "0"}

self.body = IntermediateLayerGetter(backbone, return_layers=return_layers)

self.num_channels = num_channels

def forward(self, tensor_list: NestedTensor):

xs = self.body(tensor_list.tensors)

out: Dict[str, NestedTensor] = {}

for name, x in xs.items():

m = tensor_list.mask

assert m is not None

mask = F.interpolate(m[None].float(), size=x.shape[-2:]).to(torch.bool)[0]

out[name] = NestedTensor(x, mask)

return out

class Backbone(BackboneBase):

"""ResNet backbone with frozen BatchNorm."""

def __init__(self, name: str,

train_backbone: bool,

return_interm_layers: bool,

dilation: bool):

# 加载预训练模型

backbone = getattr(torchvision.models, name)(

replace_stride_with_dilation=[False, False, dilation],

#仅在主进程加载,避免分布式训练时多进程重复下载

pretrained=is_main_process(), norm_layer=FrozenBatchNorm2d)

#输出通道数

num_channels = 512 if name in ('resnet18', 'resnet34') else 2048

super().__init__(backbone, train_backbone, num_channels, return_interm_layers)

class Joiner(nn.Sequential):

def __init__(self, backbone, position_embedding):

super().__init__(backbone, position_embedding)

#将特征图和位置编码拼接起来(out,pos)

def forward(self, tensor_list: NestedTensor):

xs = self[0](tensor_list)

out: List[NestedTensor] = []

pos = []

for name, x in xs.items():

out.append(x)

# position encoding

pos.append(self[1](x).to(x.tensors.dtype))

return out, pos

def build_backbone(args):

position_embedding = build_position_encoding(args) #位置编码

train_backbone = args.lr_backbone > 0 #是否训练backbone

return_interm_layers = args.masks #是否返回中间层

backbone = Backbone(args.backbone, train_backbone, return_interm_layers, args.dilation)

model = Joiner(backbone, position_embedding)

model.num_channels = backbone.num_channels

return modelposition_encoding.py

定义两种位置编码(正弦位置编码和可学习位置编码)

二选一

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved

"""

Various positional encodings for the transformer.

"""

import math

import torch

from torch import nn

from util.misc import NestedTensor

#正弦位置编码

#适用于图像的位置编码

class PositionEmbeddingSine(nn.Module):

"""

This is a more standard version of the position embedding, very similar to the one

used by the Attention is all you need paper, generalized to work on images.

"""

def __init__(self, num_pos_feats=64, temperature=10000, normalize=False, scale=None):

super().__init__()

self.num_pos_feats = num_pos_feats

self.temperature = temperature

self.normalize = normalize

if scale is not None and normalize is False:

raise ValueError("normalize should be True if scale is passed")

if scale is None:

scale = 2 * math.pi

self.scale = scale

def forward(self, tensor_list: NestedTensor):

x = tensor_list.tensors

mask = tensor_list.mask

assert mask is not None

not_mask = ~mask

y_embed = not_mask.cumsum(1, dtype=torch.float32)

x_embed = not_mask.cumsum(2, dtype=torch.float32)

if self.normalize:

eps = 1e-6

y_embed = y_embed / (y_embed[:, -1:, :] + eps) * self.scale

x_embed = x_embed / (x_embed[:, :, -1:] + eps) * self.scale

dim_t = torch.arange(self.num_pos_feats, dtype=torch.float32, device=x.device)

dim_t = self.temperature ** (2 * (dim_t // 2) / self.num_pos_feats)

pos_x = x_embed[:, :, :, None] / dim_t

pos_y = y_embed[:, :, :, None] / dim_t

pos_x = torch.stack((pos_x[:, :, :, 0::2].sin(), pos_x[:, :, :, 1::2].cos()), dim=4).flatten(3)

pos_y = torch.stack((pos_y[:, :, :, 0::2].sin(), pos_y[:, :, :, 1::2].cos()), dim=4).flatten(3)

pos = torch.cat((pos_y, pos_x), dim=3).permute(0, 3, 1, 2)

return pos

# 可学习位置编码

#通过嵌入层,为每个位置学习固定数量的特征

class PositionEmbeddingLearned(nn.Module):

"""

Absolute pos embedding, learned.

"""

def __init__(self, num_pos_feats=256):

super().__init__()

self.row_embed = nn.Embedding(50, num_pos_feats)

self.col_embed = nn.Embedding(50, num_pos_feats)

self.reset_parameters()

def reset_parameters(self):

nn.init.uniform_(self.row_embed.weight)

nn.init.uniform_(self.col_embed.weight)

def forward(self, tensor_list: NestedTensor):

x = tensor_list.tensors

h, w = x.shape[-2:]

i = torch.arange(w, device=x.device)

j = torch.arange(h, device=x.device)

x_emb = self.col_embed(i)

y_emb = self.row_embed(j)

pos = torch.cat([

x_emb.unsqueeze(0).repeat(h, 1, 1),

y_emb.unsqueeze(1).repeat(1, w, 1),

], dim=-1).permute(2, 0, 1).unsqueeze(0).repeat(x.shape[0], 1, 1, 1)

return pos

def build_position_encoding(args):

N_steps = args.hidden_dim // 2

if args.position_embedding in ('v2', 'sine'):

# TODO find a better way of exposing other arguments

position_embedding = PositionEmbeddingSine(N_steps, normalize=True)

elif args.position_embedding in ('v3', 'learned'):

position_embedding = PositionEmbeddingLearned(N_steps)

else:

raise ValueError(f"not supported {args.position_embedding}")

return position_embeddingtransformer.py

定义transformer结构

- Transformer 类

- 整个 Transformer 模型的实现。

- TransformerEncoder 类

- Transformer 编码器的实现。

- TransformerDecoder 类

- Transformer 解码器的实现。

- TransformerEncoderLayer 类

- 单个编码器层的实现。

- TransformerDecoderLayer 类

- 单个解码器层的实现。

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved

"""

DETR Transformer class.

Copy-paste from torch.nn.Transformer with modifications:

* positional encodings are passed in MHattention

* extra LN at the end of encoder is removed

* decoder returns a stack of activations from all decoding layers

"""

import copy

from typing import Optional, List

import torch

import torch.nn.functional as F

from torch import nn, Tensor

class Transformer(nn.Module):

def __init__(self, d_model=512, nhead=8, num_encoder_layers=6,

num_decoder_layers=6, dim_feedforward=2048, dropout=0.1,

activation="relu", normalize_before=False,

return_intermediate_dec=False):

super().__init__()

encoder_layer = TransformerEncoderLayer(d_model, nhead, dim_feedforward,

dropout, activation, normalize_before)

encoder_norm = nn.LayerNorm(d_model) if normalize_before else None

self.encoder = TransformerEncoder(encoder_layer, num_encoder_layers, encoder_norm)

decoder_layer = TransformerDecoderLayer(d_model, nhead, dim_feedforward,

dropout, activation, normalize_before)

decoder_norm = nn.LayerNorm(d_model)

self.decoder = TransformerDecoder(decoder_layer, num_decoder_layers, decoder_norm,

return_intermediate=return_intermediate_dec)

self._reset_parameters()

self.d_model = d_model #特征图的维度

self.nhead = nhead #多头注意力的头数

#使用Xavier初始化模型参数

def _reset_parameters(self):

for p in self.parameters():

if p.dim() > 1:

nn.init.xavier_uniform_(p)

#特征图[batch_size,channels,height,width]

#mask掩码[batch_size,height,width]

#query_embed[num_queries, hidden_dim]

#pos_embed[batch_size,channels,height,width]

def forward(self, src, mask, query_embed, pos_embed):

# flatten NxCxHxW to HWxNxC

bs, c, h, w = src.shape

src = src.flatten(2).permute(2, 0, 1) #[height*width,batch_size,channels]

pos_embed = pos_embed.flatten(2).permute(2, 0, 1) #[height*width,batch_size,channels]

query_embed = query_embed.unsqueeze(1).repeat(1, bs, 1) #[num_queries,batch_size,hidden_dim]

mask = mask.flatten(1)

tgt = torch.zeros_like(query_embed)

memory = self.encoder(src, src_key_padding_mask=mask, pos=pos_embed)

hs = self.decoder(tgt, memory, memory_key_padding_mask=mask,

pos=pos_embed, query_pos=query_embed)

return hs.transpose(1, 2), memory.permute(1, 2, 0).view(bs, c, h, w)

class TransformerEncoder(nn.Module):

def __init__(self, encoder_layer, num_layers, norm=None):

super().__init__()

#encoder_layer是TransformerEncoderLayer类

#num_layers是TransformerEncoderLayer的个数

self.layers = _get_clones(encoder_layer, num_layers)

self.num_layers = num_layers

self.norm = norm #归一化层

def forward(self, src,

mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

output = src

for layer in self.layers:

output = layer(output, src_mask=mask,

src_key_padding_mask=src_key_padding_mask, pos=pos)

if self.norm is not None:

output = self.norm(output)

return output

class TransformerDecoder(nn.Module):

def __init__(self, decoder_layer, num_layers, norm=None, return_intermediate=False):

super().__init__()

self.layers = _get_clones(decoder_layer, num_layers)

self.num_layers = num_layers

self.norm = norm

self.return_intermediate = return_intermediate

#遍历解码器层,记录中间输出,归一化

def forward(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

#tgt[num_queries,batch_size,hidden_dim]

output = tgt

intermediate = []

#将目标张量和记忆张量依次传递给每一层解码器

for layer in self.layers:

output = layer(output, memory, tgt_mask=tgt_mask,

memory_mask=memory_mask,

tgt_key_padding_mask=tgt_key_padding_mask,

memory_key_padding_mask=memory_key_padding_mask,

pos=pos, query_pos=query_pos)

if self.return_intermediate:

intermediate.append(self.norm(output))

if self.norm is not None:

output = self.norm(output)

if self.return_intermediate:

intermediate.pop()

intermediate.append(output)

if self.return_intermediate:

return torch.stack(intermediate)

return output.unsqueeze(0) #[1,num_queries,batch_size,hidden_dim]

#实现单个编码器层,自注意力和前馈

class TransformerEncoderLayer(nn.Module):

def __init__(self, d_model, nhead, dim_feedforward=2048, dropout=0.1,

activation="relu", normalize_before=False):

super().__init__()

self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)

# Implementation of Feedforward model

self.linear1 = nn.Linear(d_model, dim_feedforward)

self.dropout = nn.Dropout(dropout)

self.linear2 = nn.Linear(dim_feedforward, d_model)

self.norm1 = nn.LayerNorm(d_model)

self.norm2 = nn.LayerNorm(d_model)

self.dropout1 = nn.Dropout(dropout)

self.dropout2 = nn.Dropout(dropout)

self.activation = _get_activation_fn(activation)

self.normalize_before = normalize_before

def with_pos_embed(self, tensor, pos: Optional[Tensor]):

return tensor if pos is None else tensor + pos

#先应用自注意力机制和前馈网络再归一化

def forward_post(self,

src,

src_mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

q = k = self.with_pos_embed(src, pos)

src2 = self.self_attn(q, k, value=src, attn_mask=src_mask,

key_padding_mask=src_key_padding_mask)[0]

src = src + self.dropout1(src2)

src = self.norm1(src)

src2 = self.linear2(self.dropout(self.activation(self.linear1(src))))

src = src + self.dropout2(src2)

src = self.norm2(src)

return src

#先归一化再应用自注意力机制和前馈网络

def forward_pre(self, src,

src_mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

src2 = self.norm1(src)

q = k = self.with_pos_embed(src2, pos)

src2 = self.self_attn(q, k, value=src2, attn_mask=src_mask,

key_padding_mask=src_key_padding_mask)[0]

src = src + self.dropout1(src2)

src2 = self.norm2(src)

src2 = self.linear2(self.dropout(self.activation(self.linear1(src2))))

src = src + self.dropout2(src2)

return src

def forward(self, src,

src_mask: Optional[Tensor] = None,

src_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None):

if self.normalize_before: #选择合适的前向传播

return self.forward_pre(src, src_mask, src_key_padding_mask, pos)

return self.forward_post(src, src_mask, src_key_padding_mask, pos)

class TransformerDecoderLayer(nn.Module):

def __init__(self, d_model, nhead, dim_feedforward=2048, dropout=0.1,

activation="relu", normalize_before=False):

super().__init__()

self.self_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)

self.multihead_attn = nn.MultiheadAttention(d_model, nhead, dropout=dropout)

# Implementation of Feedforward model

self.linear1 = nn.Linear(d_model, dim_feedforward)

self.dropout = nn.Dropout(dropout)

self.linear2 = nn.Linear(dim_feedforward, d_model)

self.norm1 = nn.LayerNorm(d_model)

self.norm2 = nn.LayerNorm(d_model)

self.norm3 = nn.LayerNorm(d_model)

self.dropout1 = nn.Dropout(dropout)

self.dropout2 = nn.Dropout(dropout)

self.dropout3 = nn.Dropout(dropout)

self.activation = _get_activation_fn(activation)

self.normalize_before = normalize_before

def with_pos_embed(self, tensor, pos: Optional[Tensor]):

return tensor if pos is None else tensor + pos

def forward_post(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

q = k = self.with_pos_embed(tgt, query_pos)

tgt2 = self.self_attn(q, k, value=tgt, attn_mask=tgt_mask,

key_padding_mask=tgt_key_padding_mask)[0]

tgt = tgt + self.dropout1(tgt2)

tgt = self.norm1(tgt)

tgt2 = self.multihead_attn(query=self.with_pos_embed(tgt, query_pos),

key=self.with_pos_embed(memory, pos),

value=memory, attn_mask=memory_mask,

key_padding_mask=memory_key_padding_mask)[0]

tgt = tgt + self.dropout2(tgt2)

tgt = self.norm2(tgt)

tgt2 = self.linear2(self.dropout(self.activation(self.linear1(tgt))))

tgt = tgt + self.dropout3(tgt2)

tgt = self.norm3(tgt)

return tgt

def forward_pre(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

tgt2 = self.norm1(tgt)

q = k = self.with_pos_embed(tgt2, query_pos)

tgt2 = self.self_attn(q, k, value=tgt2, attn_mask=tgt_mask,

key_padding_mask=tgt_key_padding_mask)[0]

tgt = tgt + self.dropout1(tgt2)

tgt2 = self.norm2(tgt)

tgt2 = self.multihead_attn(query=self.with_pos_embed(tgt2, query_pos),

key=self.with_pos_embed(memory, pos),

value=memory, attn_mask=memory_mask,

key_padding_mask=memory_key_padding_mask)[0]

tgt = tgt + self.dropout2(tgt2)

tgt2 = self.norm3(tgt)

tgt2 = self.linear2(self.dropout(self.activation(self.linear1(tgt2))))

tgt = tgt + self.dropout3(tgt2)

return tgt

def forward(self, tgt, memory,

tgt_mask: Optional[Tensor] = None,

memory_mask: Optional[Tensor] = None,

tgt_key_padding_mask: Optional[Tensor] = None,

memory_key_padding_mask: Optional[Tensor] = None,

pos: Optional[Tensor] = None,

query_pos: Optional[Tensor] = None):

if self.normalize_before:

return self.forward_pre(tgt, memory, tgt_mask, memory_mask,

tgt_key_padding_mask, memory_key_padding_mask, pos, query_pos)

return self.forward_post(tgt, memory, tgt_mask, memory_mask,

tgt_key_padding_mask, memory_key_padding_mask, pos, query_pos)

def _get_clones(module, N):

return nn.ModuleList([copy.deepcopy(module) for i in range(N)])

def build_transformer(args):

return Transformer(

d_model=args.hidden_dim,

dropout=args.dropout,

nhead=args.nheads,

dim_feedforward=args.dim_feedforward,

num_encoder_layers=args.enc_layers,

num_decoder_layers=args.dec_layers,

normalize_before=args.pre_norm,

return_intermediate_dec=True,

)

def _get_activation_fn(activation):

"""Return an activation function given a string"""

if activation == "relu":

return F.relu

if activation == "gelu":

return F.gelu

if activation == "glu":

return F.glu

raise RuntimeError(F"activation should be relu/gelu, not {activation}.")matcher.py

定义匈牙利算法进行二分匹配

- HungarianMatcher 类

- 计算匹配成本并使用匈牙利算法求解最佳匹配。

- build_matcher 函数

- 根据命令行参数构建 HungarianMatcher 实例。

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved

"""

Modules to compute the matching cost and solve the corresponding LSAP.

"""

import torch

from scipy.optimize import linear_sum_assignment

from torch import nn

from util.box_ops import box_cxcywh_to_xyxy, generalized_box_iou

class HungarianMatcher(nn.Module):

"""This class computes an assignment between the targets and the predictions of the network

For efficiency reasons, the targets don't include the no_object. Because of this, in general,

there are more predictions than targets. In this case, we do a 1-to-1 matching of the best predictions,

while the others are un-matched (and thus treated as non-objects).

"""

def __init__(self, cost_class: float = 1, cost_bbox: float = 1, cost_giou: float = 1):

"""Creates the matcher

Params:

cost_class: This is the relative weight of the classification error in the matching cost

cost_bbox: This is the relative weight of the L1 error of the bounding box coordinates in the matching cost

cost_giou: This is the relative weight of the giou loss of the bounding box in the matching cost

"""

super().__init__()

self.cost_class = cost_class #匹配成本中分类错误的权重

self.cost_bbox = cost_bbox #边界框坐标L1误差的权重

self.cost_giou = cost_giou #边界框GIoU损失的权重

assert cost_class != 0 or cost_bbox != 0 or cost_giou != 0, "all costs cant be 0"

#计算三种cost, 组合成最终的cost矩阵,利用匈牙利算法求解最佳匹配。

@torch.no_grad()

def forward(self, outputs, targets):

""" Performs the matching

Params:

outputs: This is a dict that contains at least these entries:

"pred_logits": Tensor of dim [batch_size, num_queries, num_classes] with the classification logits

"pred_boxes": Tensor of dim [batch_size, num_queries, 4] with the predicted box coordinates

targets: This is a list of targets (len(targets) = batch_size), where each target is a dict containing:

"labels": Tensor of dim [num_target_boxes] (where num_target_boxes is the number of ground-truth

objects in the target) containing the class labels

"boxes": Tensor of dim [num_target_boxes, 4] containing the target box coordinates

Returns:

A list of size batch_size, containing tuples of (index_i, index_j) where:

- index_i is the indices of the selected predictions (in order)

- index_j is the indices of the corresponding selected targets (in order)

For each batch element, it holds:

len(index_i) = len(index_j) = min(num_queries, num_target_boxes)

"""

bs, num_queries = outputs["pred_logits"].shape[:2]

# We flatten to compute the cost matrices in a batch

out_prob = outputs["pred_logits"].flatten(0, 1).softmax(-1) # [batch_size * num_queries, num_classes]

out_bbox = outputs["pred_boxes"].flatten(0, 1) # [batch_size * num_queries, 4]

# Also concat the target labels and boxes

tgt_ids = torch.cat([v["labels"] for v in targets])

tgt_bbox = torch.cat([v["boxes"] for v in targets])

# Compute the classification cost. Contrary to the loss, we don't use the NLL,

# but approximate it in 1 - proba[target class].

# The 1 is a constant that doesn't change the matching, it can be ommitted.

cost_class = -out_prob[:, tgt_ids]

# Compute the L1 cost between boxes

cost_bbox = torch.cdist(out_bbox, tgt_bbox, p=1)

# Compute the giou cost betwen boxes

cost_giou = -generalized_box_iou(box_cxcywh_to_xyxy(out_bbox), box_cxcywh_to_xyxy(tgt_bbox))

# Final cost matrix

C = self.cost_bbox * cost_bbox + self.cost_class * cost_class + self.cost_giou * cost_giou

C = C.view(bs, num_queries, -1).cpu()

sizes = [len(v["boxes"]) for v in targets]

indices = [linear_sum_assignment(c[i]) for i, c in enumerate(C.split(sizes, -1))]

return [(torch.as_tensor(i, dtype=torch.int64), torch.as_tensor(j, dtype=torch.int64)) for i, j in indices]

def build_matcher(args):

return HungarianMatcher(cost_class=args.set_cost_class, cost_bbox=args.set_cost_bbox, cost_giou=args.set_cost_giou)detr.py

定义模型结构、损失计算等等

- DETR 类

- 定义了 模型的主类,用于执行目标检测任务。

- SetCriterion 类

- 定义了 损失计算模块,用于监督分类和边界框回归。

- PostProcess 类

- 定义了将模型输出转换为 COCO API 格式的后处理模块。

- MLP 类

- 定义了一个简单的FNN,用于生成边界框坐标。

- build 函数

- 构建 DETR 模型、损失计算模块和后处理(分割会用)模块。

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved """ DETR model and criterion classes. """ import torch import torch.nn.functional as F from torch import nn from util import box_ops from util.misc import (NestedTensor, nested_tensor_from_tensor_list, accuracy, get_world_size, interpolate, is_dist_avail_and_initialized) from .backbone import build_backbone from .matcher import build_matcher from .segmentation import (DETRsegm, PostProcessPanoptic, PostProcessSegm, dice_loss, sigmoid_focal_loss) from .transformer import build_transformer class DETR(nn.Module): """ This is the DETR module that performs object detection """ def __init__(self, backbone, transformer, num_classes, num_queries, aux_loss=False): """ Initializes the model. Parameters: backbone: torch module of the backbone to be used. See backbone.py transformer: torch module of the transformer architecture. See transformer.py num_classes: number of object classes num_queries: number of object queries, ie detection slot. This is the maximal number of objects DETR can detect in a single image. For COCO, we recommend 100 queries. aux_loss: True if auxiliary decoding losses (loss at each decoder layer) are to be used. """ super().__init__() self.num_queries = num_queries self.transformer = transformer hidden_dim = transformer.d_model self.class_embed = nn.Linear(hidden_dim, num_classes + 1) self.bbox_embed = MLP(hidden_dim, hidden_dim, 4, 3) self.query_embed = nn.Embedding(num_queries, hidden_dim) self.input_proj = nn.Conv2d(backbone.num_channels, hidden_dim, kernel_size=1) self.backbone = backbone self.aux_loss = aux_loss def forward(self, samples: NestedTensor): """ The forward expects a NestedTensor, which consists of: - samples.tensor: batched images, of shape [batch_size x 3 x H x W] - samples.mask: a binary mask of shape [batch_size x H x W], containing 1 on padded pixels It returns a dict with the following elements: - "pred_logits": the classification logits (including no-object) for all queries. Shape= [batch_size x num_queries x (num_classes + 1)] - "pred_boxes": The normalized boxes coordinates for all queries, represented as (center_x, center_y, height, width). These values are normalized in [0, 1], relative to the size of each individual image (disregarding possible padding). See PostProcess for information on how to retrieve the unnormalized bounding box. - "aux_outputs": Optional, only returned when auxilary losses are activated. It is a list of dictionnaries containing the two above keys for each decoder layer. """ if isinstance(samples, (list, torch.Tensor)): samples = nested_tensor_from_tensor_list(samples) features, pos = self.backbone(samples) src, mask = features[-1].decompose() assert mask is not None hs = self.transformer(self.input_proj(src), mask, self.query_embed.weight, pos[-1])[0] outputs_class = self.class_embed(hs) outputs_coord = self.bbox_embed(hs).sigmoid() out = {'pred_logits': outputs_class[-1], 'pred_boxes': outputs_coord[-1]} if self.aux_loss: out['aux_outputs'] = self._set_aux_loss(outputs_class, outputs_coord) return out @torch.jit.unused def _set_aux_loss(self, outputs_class, outputs_coord): # this is a workaround to make torchscript happy, as torchscript # doesn't support dictionary with non-homogeneous values, such # as a dict having both a Tensor and a list. return [{'pred_logits': a, 'pred_boxes': b} for a, b in zip(outputs_class[:-1], outputs_coord[:-1])] class SetCriterion(nn.Module): """ This class computes the loss for DETR. The process happens in two steps: 1) we compute hungarian assignment between ground truth boxes and the outputs of the model 2) we supervise each pair of matched ground-truth / prediction (supervise class and box) """ def __init__(self, num_classes, matcher, weight_dict, eos_coef, losses): """ Create the criterion. Parameters: num_classes: number of object categories, omitting the special no-object category matcher: module able to compute a matching between targets and proposals weight_dict: dict containing as key the names of the losses and as values their relative weight. eos_coef: relative classification weight applied to the no-object category losses: list of all the losses to be applied. See get_loss for list of available losses. """ super().__init__() self.num_classes = num_classes self.matcher = matcher self.weight_dict = weight_dict self.eos_coef = eos_coef self.losses = losses empty_weight = torch.ones(self.num_classes + 1) empty_weight[-1] = self.eos_coef self.register_buffer('empty_weight', empty_weight) def loss_labels(self, outputs, targets, indices, num_boxes, log=True): """Classification loss (NLL) targets dicts must contain the key "labels" containing a tensor of dim [nb_target_boxes] """ assert 'pred_logits' in outputs src_logits = outputs['pred_logits'] idx = self._get_src_permutation_idx(indices) target_classes_o = torch.cat([t["labels"][J] for t, (_, J) in zip(targets, indices)]) target_classes = torch.full(src_logits.shape[:2], self.num_classes, dtype=torch.int64, device=src_logits.device) target_classes[idx] = target_classes_o loss_ce = F.cross_entropy(src_logits.transpose(1, 2), target_classes, self.empty_weight) losses = {'loss_ce': loss_ce} if log: # TODO this should probably be a separate loss, not hacked in this one here losses['class_error'] = 100 - accuracy(src_logits[idx], target_classes_o)[0] return losses @torch.no_grad() def loss_cardinality(self, outputs, targets, indices, num_boxes): """ Compute the cardinality error, ie the absolute error in the number of predicted non-empty boxes This is not really a loss, it is intended for logging purposes only. It doesn't propagate gradients """ pred_logits = outputs['pred_logits'] device = pred_logits.device tgt_lengths = torch.as_tensor([len(v["labels"]) for v in targets], device=device) # Count the number of predictions that are NOT "no-object" (which is the last class) card_pred = (pred_logits.argmax(-1) != pred_logits.shape[-1] - 1).sum(1) card_err = F.l1_loss(card_pred.float(), tgt_lengths.float()) losses = {'cardinality_error': card_err} return losses def loss_boxes(self, outputs, targets, indices, num_boxes): """Compute the losses related to the bounding boxes, the L1 regression loss and the GIoU loss targets dicts must contain the key "boxes" containing a tensor of dim [nb_target_boxes, 4] The target boxes are expected in format (center_x, center_y, w, h), normalized by the image size. """ assert 'pred_boxes' in outputs idx = self._get_src_permutation_idx(indices) src_boxes = outputs['pred_boxes'][idx] target_boxes = torch.cat([t['boxes'][i] for t, (_, i) in zip(targets, indices)], dim=0) loss_bbox = F.l1_loss(src_boxes, target_boxes, reduction='none') losses = {} losses['loss_bbox'] = loss_bbox.sum() / num_boxes loss_giou = 1 - torch.diag(box_ops.generalized_box_iou( box_ops.box_cxcywh_to_xyxy(src_boxes), box_ops.box_cxcywh_to_xyxy(target_boxes))) losses['loss_giou'] = loss_giou.sum() / num_boxes return losses def loss_masks(self, outputs, targets, indices, num_boxes): """Compute the losses related to the masks: the focal loss and the dice loss. targets dicts must contain the key "masks" containing a tensor of dim [nb_target_boxes, h, w] """ assert "pred_masks" in outputs src_idx = self._get_src_permutation_idx(indices) tgt_idx = self._get_tgt_permutation_idx(indices) src_masks = outputs["pred_masks"] src_masks = src_masks[src_idx] masks = [t["masks"] for t in targets] # TODO use valid to mask invalid areas due to padding in loss target_masks, valid = nested_tensor_from_tensor_list(masks).decompose() target_masks = target_masks.to(src_masks) target_masks = target_masks[tgt_idx] # upsample predictions to the target size src_masks = interpolate(src_masks[:, None], size=target_masks.shape[-2:], mode="bilinear", align_corners=False) src_masks = src_masks[:, 0].flatten(1) target_masks = target_masks.flatten(1) target_masks = target_masks.view(src_masks.shape) losses = { "loss_mask": sigmoid_focal_loss(src_masks, target_masks, num_boxes), "loss_dice": dice_loss(src_masks, target_masks, num_boxes), } return losses def _get_src_permutation_idx(self, indices): # permute predictions following indices batch_idx = torch.cat([torch.full_like(src, i) for i, (src, _) in enumerate(indices)]) src_idx = torch.cat([src for (src, _) in indices]) return batch_idx, src_idx def _get_tgt_permutation_idx(self, indices): # permute targets following indices batch_idx = torch.cat([torch.full_like(tgt, i) for i, (_, tgt) in enumerate(indices)]) tgt_idx = torch.cat([tgt for (_, tgt) in indices]) return batch_idx, tgt_idx def get_loss(self, loss, outputs, targets, indices, num_boxes, **kwargs): loss_map = { 'labels': self.loss_labels, 'cardinality': self.loss_cardinality, 'boxes': self.loss_boxes, 'masks': self.loss_masks } assert loss in loss_map, f'do you really want to compute {loss} loss?' return loss_map[loss](outputs, targets, indices, num_boxes, **kwargs) def forward(self, outputs, targets): """ This performs the loss computation. Parameters: outputs: dict of tensors, see the output specification of the model for the format targets: list of dicts, such that len(targets) == batch_size. The expected keys in each dict depends on the losses applied, see each loss' doc """ outputs_without_aux = {k: v for k, v in outputs.items() if k != 'aux_outputs'} # Retrieve the matching between the outputs of the last layer and the targets indices = self.matcher(outputs_without_aux, targets) # Compute the average number of target boxes accross all nodes, for normalization purposes num_boxes = sum(len(t["labels"]) for t in targets) num_boxes = torch.as_tensor([num_boxes], dtype=torch.float, device=next(iter(outputs.values())).device) if is_dist_avail_and_initialized(): torch.distributed.all_reduce(num_boxes) num_boxes = torch.clamp(num_boxes / get_world_size(), min=1).item() # Compute all the requested losses losses = {} for loss in self.losses: losses.update(self.get_loss(loss, outputs, targets, indices, num_boxes)) # In case of auxiliary losses, we repeat this process with the output of each intermediate layer. if 'aux_outputs' in outputs: for i, aux_outputs in enumerate(outputs['aux_outputs']): indices = self.matcher(aux_outputs, targets) for loss in self.losses: if loss == 'masks': # Intermediate masks losses are too costly to compute, we ignore them. continue kwargs = {} if loss == 'labels': # Logging is enabled only for the last layer kwargs = {'log': False} l_dict = self.get_loss(loss, aux_outputs, targets, indices, num_boxes, **kwargs) l_dict = {k + f'_{i}': v for k, v in l_dict.items()} losses.update(l_dict) return losses class PostProcess(nn.Module): """ This module converts the model's output into the format expected by the coco api""" @torch.no_grad() def forward(self, outputs, target_sizes): """ Perform the computation Parameters: outputs: raw outputs of the model target_sizes: tensor of dimension [batch_size x 2] containing the size of each images of the batch For evaluation, this must be the original image size (before any data augmentation) For visualization, this should be the image size after data augment, but before padding """ out_logits, out_bbox = outputs['pred_logits'], outputs['pred_boxes'] assert len(out_logits) == len(target_sizes) assert target_sizes.shape[1] == 2 prob = F.softmax(out_logits, -1) scores, labels = prob[..., :-1].max(-1) # convert to [x0, y0, x1, y1] format boxes = box_ops.box_cxcywh_to_xyxy(out_bbox) # and from relative [0, 1] to absolute [0, height] coordinates img_h, img_w = target_sizes.unbind(1) scale_fct = torch.stack([img_w, img_h, img_w, img_h], dim=1) boxes = boxes * scale_fct[:, None, :] results = [{'scores': s, 'labels': l, 'boxes': b} for s, l, b in zip(scores, labels, boxes)] return results class MLP(nn.Module): """ Very simple multi-layer perceptron (also called FFN)""" def __init__(self, input_dim, hidden_dim, output_dim, num_layers): super().__init__() self.num_layers = num_layers h = [hidden_dim] * (num_layers - 1) self.layers = nn.ModuleList(nn.Linear(n, k) for n, k in zip([input_dim] + h, h + [output_dim])) def forward(self, x): for i, layer in enumerate(self.layers): x = F.relu(layer(x)) if i < self.num_layers - 1 else layer(x) return x def build(args): # the `num_classes` naming here is somewhat misleading. # it indeed corresponds to `max_obj_id + 1`, where max_obj_id # is the maximum id for a class in your dataset. For example, # COCO has a max_obj_id of 90, so we pass `num_classes` to be 91. # As another example, for a dataset that has a single class with id 1, # you should pass `num_classes` to be 2 (max_obj_id + 1). # For more details on this, check the following discussion # https://github.com/facebookresearch/detr/issues/108#issuecomment-650269223 # num_classes = 20 if args.dataset_file != 'coco' else 91 # if args.dataset_file == "coco_panoptic": # # for panoptic, we just add a num_classes that is large enough to hold # # max_obj_id + 1, but the exact value doesn't really matter # num_classes = 250 num_classes = 5 device = torch.device(args.device) backbone = build_backbone(args) transformer = build_transformer(args) model = DETR( backbone, transformer, num_classes=num_classes, num_queries=args.num_queries, aux_loss=args.aux_loss, ) if args.masks: model = DETRsegm(model, freeze_detr=(args.frozen_weights is not None)) matcher = build_matcher(args) weight_dict = {'loss_ce': 1, 'loss_bbox': args.bbox_loss_coef} weight_dict['loss_giou'] = args.giou_loss_coef if args.masks: weight_dict["loss_mask"] = args.mask_loss_coef weight_dict["loss_dice"] = args.dice_loss_coef # TODO this is a hack if args.aux_loss: aux_weight_dict = {} for i in range(args.dec_layers - 1): aux_weight_dict.update({k + f'_{i}': v for k, v in weight_dict.items()}) weight_dict.update(aux_weight_dict) losses = ['labels', 'boxes', 'cardinality'] if args.masks: losses += ["masks"] criterion = SetCriterion(num_classes, matcher=matcher, weight_dict=weight_dict, eos_coef=args.eos_coef, losses=losses) criterion.to(device) postprocessors = {'bbox': PostProcess()} if args.masks: postprocessors['segm'] = PostProcessSegm() if args.dataset_file == "coco_panoptic": is_thing_map = {i: i <= 90 for i in range(201)} postprocessors["panoptic"] = PostProcessPanoptic(is_thing_map, threshold=0.85) return model, criterion, postprocessorsmain.py

接收参数、训练评估记录等等

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved

import argparse

import datetime

import json

import random

import time

from pathlib import Path

import numpy as np

import torch

from torch.utils.data import DataLoader, DistributedSampler

import datasets

import util.misc as utils

from datasets import build_dataset, get_coco_api_from_dataset

from engine import evaluate, train_one_epoch

from models import build_model

#定义大量的命令行参数

def get_args_parser():

#训练时用到的一些参数,比如批次、学习率等等

parser = argparse.ArgumentParser('Set transformer detector', add_help=False)

parser.add_argument('--lr', default=1e-4, type=float)

parser.add_argument('--lr_backbone', default=1e-5, type=float)

parser.add_argument('--batch_size', default=2, type=int)

parser.add_argument('--weight_decay', default=1e-4, type=float)

parser.add_argument('--epochs', default=300, type=int)

parser.add_argument('--lr_drop', default=200, type=int)

parser.add_argument('--clip_max_norm', default=0.1, type=float,

help='gradient clipping max norm')

# Model parameters

parser.add_argument('--frozen_weights', type=str, default=None,

help="Path to the pretrained model. If set, only the mask head will be trained")

# * Backbone

parser.add_argument('--backbone', default='resnet50', type=str,

help="Name of the convolutional backbone to use")

parser.add_argument('--dilation', action='store_true',

help="If true, we replace stride with dilation in the last convolutional block (DC5)")

parser.add_argument('--position_embedding', default='learned', type=str, choices=('sine', 'learned'),

help="Type of positional embedding to use on top of the image features")

# * Transformer

parser.add_argument('--enc_layers', default=6, type=int,

help="Number of encoding layers in the transformer")

parser.add_argument('--dec_layers', default=6, type=int,

help="Number of decoding layers in the transformer")

parser.add_argument('--dim_feedforward', default=2048, type=int,

help="Intermediate size of the feedforward layers in the transformer blocks")

parser.add_argument('--hidden_dim', default=256, type=int,

help="Size of the embeddings (dimension of the transformer)")

parser.add_argument('--dropout', default=0.1, type=float,

help="Dropout applied in the transformer")

parser.add_argument('--nheads', default=8, type=int,

help="Number of attention heads inside the transformer's attentions")

parser.add_argument('--num_queries', default=100, type=int,

help="Number of query slots")

parser.add_argument('--pre_norm', action='store_true')

# * Segmentation

parser.add_argument('--masks', action='store_true',

help="Train segmentation head if the flag is provided")

# Loss

parser.add_argument('--no_aux_loss', dest='aux_loss', action='store_false',

help="Disables auxiliary decoding losses (loss at each layer)")

# * Matcher

parser.add_argument('--set_cost_class', default=1, type=float,

help="Class coefficient in the matching cost")

parser.add_argument('--set_cost_bbox', default=5, type=float,

help="L1 box coefficient in the matching cost")

parser.add_argument('--set_cost_giou', default=2, type=float,

help="giou box coefficient in the matching cost")

# * Loss coefficients

parser.add_argument('--mask_loss_coef', default=1, type=float)

parser.add_argument('--dice_loss_coef', default=1, type=float)

parser.add_argument('--bbox_loss_coef', default=5, type=float)

parser.add_argument('--giou_loss_coef', default=2, type=float)

parser.add_argument('--eos_coef', default=0.1, type=float,

help="Relative classification weight of the no-object class")

# dataset parameters

parser.add_argument('--dataset_file', default='coco')

parser.add_argument('--coco_path', type=str, default='data/cylinder_project/inside-imgs-bottom/images')

parser.add_argument('--coco_panoptic_path', type=str)

parser.add_argument('--remove_difficult', action='store_true')

parser.add_argument('--output_dir', default='/data/detr-main/outputs',

help='path where to save, empty for no saving')

parser.add_argument('--device', default='cpu',

help='device to use for training / testing')

parser.add_argument('--seed', default=42, type=int)

parser.add_argument('--resume', default='detr-r50-dc5.pth', help='resume from checkpoint')

parser.add_argument('--start_epoch', default=0, type=int, metavar='N',

help='start epoch')

parser.add_argument('--eval', action='store_true')

parser.add_argument('--num_workers', default=2, type=int)

# distributed training parameters

parser.add_argument('--world_size', default=1, type=int,

help='number of distributed processes')

parser.add_argument('--dist_url', default='env://', help='url used to set up distributed training')

return parser

def main(args):

#初始化分布式训练环境

utils.init_distributed_mode(args)

print("git:\n {}\n".format(utils.get_sha()))

#检查是否用于分割任务,冻结训练仅适用于分割任务

if args.frozen_weights is not None:

assert args.masks, "Frozen training is meant for segmentation only"

print(args)

#根据命令行参数选择使用cpu/gpu

device = torch.device(args.device)

#设置随机种子以确保实验的可复现性

# fix the seed for reproducibility

seed = args.seed + utils.get_rank()

torch.manual_seed(seed)

np.random.seed(seed)

random.seed(seed)

#构建模型

model, criterion, postprocessors = build_model(args)

model.to(device)

#如果使用分布式训练,则使用DistributedDataParallel包装模型

#就是把任务分开给不同的cpu/gpu,参数默认是1, 关闭的(解析后不是1)

model_without_ddp = model

if args.distributed:

model = torch.nn.parallel.DistributedDataParallel(model, device_ids=[args.gpu])

model_without_ddp = model.module

#统计并打印模型中可训练参数的数量

n_parameters = sum(p.numel() for p in model.parameters() if p.requires_grad)

print('number of params:', n_parameters)

#优化器

#参数分组,主干和非主干

param_dicts = [

{"params": [p for n, p in model_without_ddp.named_parameters() if "backbone" not in n and p.requires_grad]},

{

"params": [p for n, p in model_without_ddp.named_parameters() if "backbone" in n and p.requires_grad],

"lr": args.lr_backbone,

},

]

#定义优化器AdamW

optimizer = torch.optim.AdamW(param_dicts, lr=args.lr,

weight_decay=args.weight_decay) #使用权重衰减

#定义学习率调度器,在不同阶段采用不同的学习率

lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer, args.lr_drop)

#构建数据集

dataset_train = build_dataset(image_set='train', args=args)

dataset_val = build_dataset(image_set='val', args=args)

#根据是否分布式训练,构建分布式采样器

if args.distributed:

sampler_train = DistributedSampler(dataset_train) #分布式采样器

sampler_val = DistributedSampler(dataset_val, shuffle=False)

else:

sampler_train = torch.utils.data.RandomSampler(dataset_train) #随机采样器,就相当于把数据打乱了

sampler_val = torch.utils.data.SequentialSampler(dataset_val)

#构建批处理采样器,这个是用于后续的训练

batch_sampler_train = torch.utils.data.BatchSampler(

sampler_train, args.batch_size, drop_last=True)

data_loader_train = DataLoader(dataset_train, batch_sampler=batch_sampler_train,

collate_fn=utils.collate_fn, num_workers=args.num_workers)

data_loader_val = DataLoader(dataset_val, args.batch_size, sampler=sampler_val,

drop_last=False, collate_fn=utils.collate_fn, num_workers=args.num_workers)

#这个是判断是不是分割任务

if args.dataset_file == "coco_panoptic":

# We also evaluate AP during panoptic training, on original coco DS

coco_val = datasets.coco.build("val", args)

base_ds = get_coco_api_from_dataset(coco_val)

else:

base_ds = get_coco_api_from_dataset(dataset_val)

#加载预训练权重

if args.frozen_weights is not None:

checkpoint = torch.load(args.frozen_weights, map_location='cpu')

model_without_ddp.detr.load_state_dict(checkpoint['model'])

#

output_dir = Path(args.output_dir)

if args.resume:

if args.resume.startswith('https'):

checkpoint = torch.hub.load_state_dict_from_url(

args.resume, map_location='cpu', check_hash=True)

else:

checkpoint = torch.load(args.resume, map_location='cpu')

model_without_ddp.load_state_dict(checkpoint['model'])

if not args.eval and 'optimizer' in checkpoint and 'lr_scheduler' in checkpoint and 'epoch' in checkpoint:

optimizer.load_state_dict(checkpoint['optimizer'])

lr_scheduler.load_state_dict(checkpoint['lr_scheduler'])

args.start_epoch = checkpoint['epoch'] + 1

#如果eval参数为真,就只进行评估,不进行训练

if args.eval:

test_stats, coco_evaluator = evaluate(model, criterion, postprocessors,

data_loader_val, base_ds, device, args.output_dir)

if args.output_dir:

utils.save_on_master(coco_evaluator.coco_eval["bbox"].eval, output_dir / "eval.pth")

return

#开始训练

print("Start training")

#记录训练时间

start_time = time.time()

for epoch in range(args.start_epoch, args.epochs):

if args.distributed:

sampler_train.set_epoch(epoch)

train_stats = train_one_epoch(

model, criterion, data_loader_train, optimizer, device, epoch,

args.clip_max_norm)

lr_scheduler.step()

#保存检查点

if args.output_dir:

checkpoint_paths = [output_dir / 'checkpoint.pth']

# extra checkpoint before LR drop and every 100 epochs

if (epoch + 1) % args.lr_drop == 0 or (epoch + 1) % 100 == 0:

checkpoint_paths.append(output_dir / f'checkpoint{epoch:04}.pth')

for checkpoint_path in checkpoint_paths:

utils.save_on_master({

'model': model_without_ddp.state_dict(),

'optimizer': optimizer.state_dict(),

'lr_scheduler': lr_scheduler.state_dict(),

'epoch': epoch,

'args': args,

}, checkpoint_path)

#进行评估

test_stats, coco_evaluator = evaluate(

model, criterion, postprocessors, data_loader_val, base_ds, device, args.output_dir

)

#记录日志

log_stats = {**{f'train_{k}': v for k, v in train_stats.items()},

**{f'test_{k}': v for k, v in test_stats.items()},

'epoch': epoch,

'n_parameters': n_parameters}

if args.output_dir and utils.is_main_process():

with (output_dir / "log.txt").open("a") as f:

f.write(json.dumps(log_stats) + "\n")

# for evaluation logs

if coco_evaluator is not None:

(output_dir / 'eval').mkdir(exist_ok=True)

if "bbox" in coco_evaluator.coco_eval:

filenames = ['latest.pth']

if epoch % 50 == 0:

filenames.append(f'{epoch:03}.pth')

for name in filenames:

torch.save(coco_evaluator.coco_eval["bbox"].eval,

output_dir / "eval" / name)

total_time = time.time() - start_time

total_time_str = str(datetime.timedelta(seconds=int(total_time)))

print('Training time {}'.format(total_time_str))

if __name__ == '__main__':

parser = argparse.ArgumentParser('DETR training and evaluation script', parents=[get_args_parser()])

args = parser.parse_args()

if args.output_dir:

Path(args.output_dir).mkdir(parents=True, exist_ok=True)

main(args)